Trustless Verification of Machine Learning

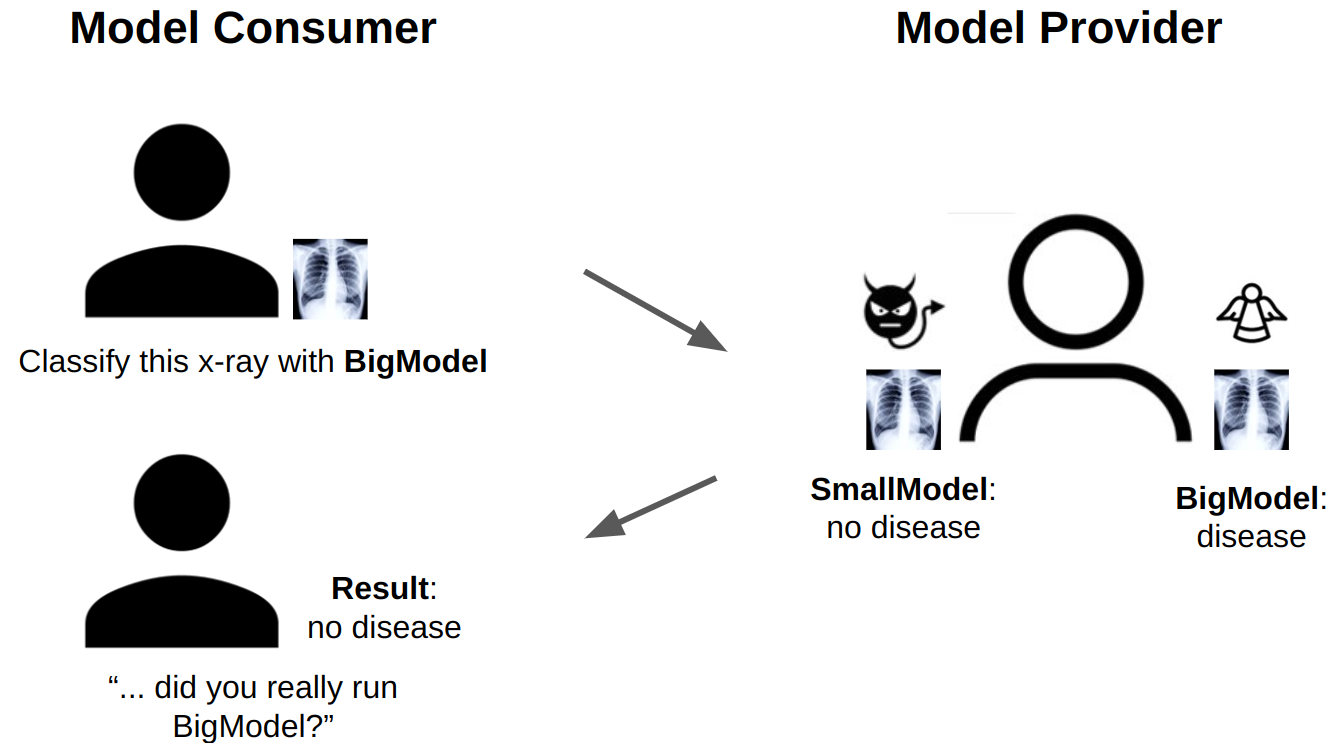

Machine learning (ML) deployments are becoming increasingly complex as ML grows in scope and accuracy. Many organizations are now turning to “ML-as-a-service” (MLaaS) providers (e.g., Amazon, Google, and Microsoft) to execute complex, proprietary ML models. As these services proliferate, they become increasingly difficult to understand and audit. Thus, a critical question emerges: how can consumers of these services trust that the service has correctly served the predictions?

To address these concerns, we have developed the first system to trustlessly verify ML model predictions for production-level models. To do so, we use a cryptographic technology called ZK-SNARKs (zero-knowledge succinct non-interactive arguments of knowledge), which allow a prover to prove the result of a computation without revealing any information about the inputs or intermediate steps of the computation. ZK-SNARKs allow an MLaaS provider to prove post-hoc that the model was executed correctly, so model consumers can verify predictions as they wish. Unfortunately, existing work on ZK-SNARKs can require up to two days of computation to verify a single ML model prediction.

To address this computational overhead, we have created the first ZK-SNARK circuit of a model on ImageNet (MobileNet v2), achieving 79% accuracy while being verifiable in 10 seconds on commodity hardware. We further construct protocols that use these ZK-SNARKs to verify ML model accuracy, to verify ML model predictions, and to trustlessly retrieve documents, all in cost-efficient ways.

In this blog post, we’ll describe our protocols and how we constructed the ZK-SNARK circuit in more detail. Further details are also in our preprint.

Using ZK-SNARKs for trustless applications

Building on our efficient ZK-SNARKs, we show that they can be used for a variety of applications. First, we show how to use ZK-SNARKs to verify ML model accuracy. Second, we show that ZK-SNARKs of ML models can be used to trustlessly retrieve images (or documents) matching an ML model classifier. Importantly, these protocols can be verified by third parties, so they can be used to resolve disputes.

First, consider the setting where a model provider (MP) has a model they wish to serve to a model consumer (MC). The MC wants to verify the model accuracy to ensure that the MP is not malicious, lazy, or erroneous (i.e., has bugs in the serving code). To verify model accuracy, the MP commits to a model by hashing its weights. The MC then sends a test set to the MP, on which the MP provides outputs and ZK-SNARK proofs of correct execution. By verifying the ZK-SNARKs on the test set, the MC can be confident that the MP has executed the model correctly. After the model accuracy is verified, the MC can purchase the model or use the MP as an MLaaS provider. To ensure that both parties are honest, we design a set of economic incentives, with details in our preprint.
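The commit-then-verify flow above can be sketched in a few lines of Python. This is a minimal illustration, not the protocol from the preprint: the function names, the salted SHA-256 commitment, and the `verify_proof` callback (a stand-in for a real ZK-SNARK verifier) are all assumptions made for the example.

```python
import hashlib
import struct

def commit_to_weights(weights, salt):
    """MP side: SHA-256 commitment to a flat list of float weights.

    The salt and the float64 encoding are assumptions of this sketch.
    """
    h = hashlib.sha256()
    h.update(salt)
    for w in weights:
        h.update(struct.pack("<d", w))  # fixed little-endian float64 encoding
    return h.hexdigest()

def audited_accuracy(commitment, labeled_test_set, responses, verify_proof):
    """MC side: count a prediction only if its proof verifies.

    verify_proof(commitment, x, prediction, proof) is a hypothetical
    callback standing in for a real ZK-SNARK verifier.
    """
    if len(labeled_test_set) != len(responses):
        raise ValueError("one response is required per test example")
    correct = 0
    for (x, label), (prediction, proof) in zip(labeled_test_set, responses):
        if not verify_proof(commitment, x, prediction, proof):
            raise ValueError("proof failed verification")
        correct += int(prediction == label)
    return correct / len(labeled_test_set)
```

Because the commitment is fixed before the test set is sent, the MP cannot swap in a different model after seeing the test inputs without the change being detectable.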

We instantiated our protocol with our ZK-SNARKs. Verifying the accuracy of an ML model to within 5% costs $99.93. For context, collecting an expert-annotated dataset can cost as much as $85,000. Our protocol adds as little as 0.1% overhead in this scenario.
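The overhead figure follows from simple arithmetic, assuming the overhead is measured as the verification cost relative to the $85,000 dataset cost (that interpretation is an assumption of this snippet):

```python
verification_cost = 99.93   # USD, from the text above
dataset_cost = 85_000.00    # USD, from the text above

# Overhead of verification relative to the dataset cost, in percent.
overhead_pct = 100 * verification_cost / dataset_cost
print(f"{overhead_pct:.2f}%")
```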

Second, consider the setting where a judge has ordered a legal subpoena. This may occur when a plaintiff requests documents for legal discovery or when a journalist requests documents under the Freedom of Information Act (FOIA). When the judge approves the subpoena, the responder must divulge documents or images matching the request, which can be specified by an ML model. To keep the remaining documents private, the responder can divulge only the matching documents, as follows. The responder first commits to the dataset by producing hashes of the documents. The requester then sends the model to the responder. Finally, the responder produces ZK-SNARK proofs of valid inference on the documents and sends only the documents that match the ML model classifier.
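The data flow of this retrieval protocol can be sketched as follows. This is an illustrative sketch under stated assumptions: the function names are hypothetical, the classifier is an ordinary Python callable, and the ZK-SNARK proofs of valid inference (which are what convince the requester that the withheld documents truly do not match) are omitted entirely.

```python
import hashlib

def commit_documents(documents):
    """Responder: publish one SHA-256 hash per document, in a fixed order."""
    return [hashlib.sha256(d).hexdigest() for d in documents]

def respond(documents, classifier):
    """Responder: reveal only the documents the classifier matches, keyed by index."""
    return {i: d for i, d in enumerate(documents) if classifier(d)}

def check_revealed(commitments, revealed):
    """Requester: every revealed document must hash to its committed value."""
    return all(
        hashlib.sha256(d).hexdigest() == commitments[i]
        for i, d in revealed.items()
    )
```

A usage example with a toy keyword classifier standing in for the ML model:

```python
docs = [b"memo about merger", b"lunch menu", b"merger term sheet"]
commitments = commit_documents(docs)
revealed = respond(docs, lambda d: b"merger" in d)
print(sorted(revealed), check_revealed(commitments, revealed))
```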

Constructing the first ImageNet-scale ZK-SNARK

As we mentioned, ZK-SNARKs allow a prover to prove the result of a computation without revealing any information about the inputs or intermediate steps of the computation. ZK-SNARKs have several non-intuitive properties that make them well suited to verifying ML models:

- They have succinct proofs, which can be as few as 100 bytes (for non-ML applications) and as few as 6 kB for the ML models we consider.

- They are non-interactive, so the proof can be verified by anyone at any point in time.

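- They are knowledge sound and complete: a prover cannot feasibly produce a proof of an incorrect result that passes verification (knowledge soundness), and proofs of correct results will verify (completeness).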

- They are zero-knowledge: the proof doesn’t reveal anything about the inputs (the model weights or model inputs in our setting) beyond the information already contained in the outputs.

Constructing most ZK-SNARKs involves two steps: arithmetization (turning the computation into an arithmetic circuit, i.e., a system of polynomial equations over a large prime field) and using a cryptographic proof system to generate the ZK-SNARK proof.
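To make arithmetization concrete, here is a toy example in Python. It is not one of the circuits from this work: the computation y = x^3 + x + 5, the wire names, and the small field modulus are all chosen purely for illustration. The computation is flattened into intermediate values, and each step becomes a polynomial constraint over a prime field.

```python
# Toy field modulus (a Mersenne prime). Real proving systems use
# much larger fields; this value is an assumption of the sketch.
P = 2**61 - 1

def witness(x):
    """Prover: compute every intermediate wire for y = x^3 + x + 5 (mod P)."""
    v1 = x * x % P
    v2 = v1 * x % P
    y = (v2 + x + 5) % P
    return {"x": x, "v1": v1, "v2": v2, "y": y}

def satisfied(w):
    """Check each polynomial constraint of the toy circuit.

    A proof system convinces a verifier that some assignment satisfies
    these constraints without revealing the private wires.
    """
    return (
        (w["x"] * w["x"] - w["v1"]) % P == 0
        and (w["v1"] * w["x"] - w["v2"]) % P == 0
        and (w["v2"] + w["x"] + 5 - w["y"]) % P == 0
    )
```

An honest witness satisfies every constraint, while tampering with any wire (for example, claiming a different output `y`) breaks at least one of them.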

To construct ZK-SNARKs for ML models that work at ImageNet-scale, we use recent developments in proving systems, which have dramatically improved in efficiency and usability in the past few years. We specifically use the halo2 library. Prior work on DNN ZK-SNARKs uses the Groth16 proving system or sum-check based systems specific to neural networks. Groth16 is less amenable to DNN inference because non-linearities cannot easily be represented with quadratic constraints. Furthermore, neural network-specific proving systems do not benefit from the broader ZK proving ecosystem, and they currently perform worse than our approach of leveraging the advances in that ecosystem.

Scaling out ZK-SNARKs in halo2 still requires several advances in efficient arithmetization. We design new methods for arithmetizing quantized DNNs, performing non-linearities efficiently via lookup arguments, and efficiently packing the circuits. Please see our preprint for more details!
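The intuition behind handling non-linearities with lookups can be shown in plain Python. This sketch does not use halo2 and is not our actual arithmetization; the fixed-point scale, the 8-bit input range, and the function names are assumptions for illustration. Instead of expressing ReLU with polynomial constraints, the circuit checks that each (input, output) pair appears in a precomputed table.

```python
SCALE = 2**4  # assumed fixed-point scale: real value = quantized value / SCALE

def quantize(x):
    """Map a real number to a fixed-point integer (assumed quantization scheme)."""
    return round(x * SCALE)

# Precomputed table of every valid (input, ReLU(input)) pair
# over an assumed signed 8-bit range.
RELU_TABLE = {(q, max(q, 0)) for q in range(-128, 128)}

def relu_lookup_ok(inp, out):
    """A lookup argument proves membership of (inp, out) in the table."""
    return (inp, out) in RELU_TABLE
```

For example, `relu_lookup_ok(quantize(-1.25), 0)` holds, while a prover claiming that ReLU left the negative input unchanged fails the check. The table also bounds the inputs, so out-of-range values are rejected as well.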

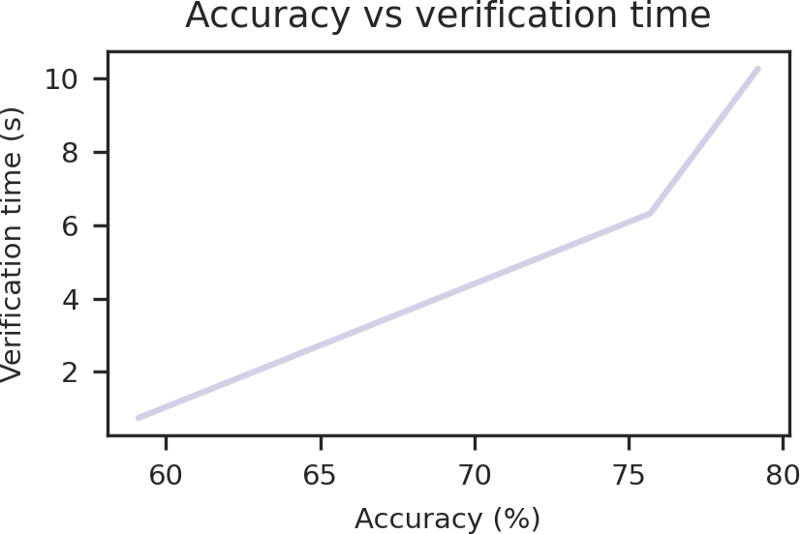

We constructed ZK-SNARKs of MobileNet v2 on ImageNet. By varying the complexity of the models, we can trade off accuracy against verification time. We constructed ZK-SNARK proofs for inference for a variety of models and show the empirical tradeoff of accuracy and verification time in the plot below:

As we can see, our models can achieve an accuracy of 79% while taking only 10s to verify on commodity hardware!

Conclusion

In our recent paper, we’ve scaled ZK-SNARKs to ML models that achieve high accuracy on ImageNet. Our ZK-SNARK constructions can achieve 79% accuracy while being verifiable within 10s. We’ve also described protocols for using these ZK-SNARKs to verify ML model accuracy and trustlessly retrieve documents. Please see our preprint for more information. Also be on the lookout for our open-source release!