Open-sourcing zkml: Trustless Machine Learning for All

We’re excited to announce the open-source release of zkml, our framework for producing zero-knowledge proofs of ML model execution. zkml builds on our earlier paper on scaling zero-knowledge proofs to ImageNet models but contains many improvements for usability, functionality, and scalability. With our improvements, we can verify execution of models that achieve 92.4% accuracy on ImageNet, a 13% improvement compared to our initial work! zkml can also prove an MNIST model with 99% accuracy in four seconds.

In this post, we’ll describe our vision for zkml and how to use zkml. In future posts, we’ll describe several applications of zkml in detail, including trustless audits, decentralized prompt marketplaces, and privacy-preserving face ID. We’ll also describe the technical challenges and details behind zkml. In the meantime, check out our open-source code!

Why do we need trustless machine learning?

Over the past few years, we’ve seen two inescapable trends: more of our world moving online and ML/AI methods becoming increasingly powerful. These ML/AI technologies have enabled new forms of art and incredible productivity increases… However, these technologies are increasingly concealed behind closed APIs.

Although these providers want to protect trade secrets, we want assurances about their models: that the training data doesn’t contain copyrighted material or that it isn’t biased. We also want assurances that a specific model was executed in high-stakes settings, such as healthcare.

In order to do so, the model provider can take two steps: commit to a model trained on a hidden dataset, and provide audits of the hidden dataset after training. In the first step, the model provider releases proofs of training on a given dataset and a commitment to the weights at the end of the process. Importantly, the weights can be kept hidden! By doing so, any third party can be assured that the training happened honestly. Then, the audit can be done using zero-knowledge proofs over the hidden data.
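To make the commitment step concrete, here is a minimal sketch of a salted hash commitment in Python. It is an illustration only and is not how zkml commits to weights; the helper names `commit` and `verify_opening` and the toy weights are hypothetical. The provider would publish the digest and keep the weights and salt private.

```python
import hashlib
import secrets
import struct


def commit(weights: list[float], salt: bytes) -> str:
    """Return a hex digest that binds the provider to `weights` (hypothetical helper)."""
    # Serialize each weight as a big-endian 64-bit float so the encoding is unambiguous.
    payload = b"".join(struct.pack(">d", w) for w in weights)
    return hashlib.sha256(salt + payload).hexdigest()


def verify_opening(digest: str, weights: list[float], salt: bytes) -> bool:
    """Check that (weights, salt) opens the published commitment."""
    return secrets.compare_digest(digest, commit(weights, salt))


salt = secrets.token_bytes(32)   # fixed-length random salt, kept private
weights = [0.25, -1.5, 3.0]      # toy weights, for illustration only
digest = commit(weights, salt)   # this value is what would be published
```

In this sketch, checking the commitment requires revealing the weights and salt. In the setting described above, the same check would instead be carried out inside a zero-knowledge proof, so the weights never leave the provider.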

We have been imagining a future where ML models can be executed trustlessly. As we’ll describe in future posts, trustless execution of ML models will enable a range of applications:

- Trustless audits of ML-powered applications, such as proving that no copyrighted images were used in a training dataset, as we described above.

- Verification that specific ML models were run by ML-as-a-service providers for regulated industries.

- Decentralized prompt marketplaces for generative AI, where creators can sell access to their prompts.

- Privacy-preserving biometric authentication, such as enabling smart contracts to use face ID.

And many more!

ZK-SNARKs for trustless ML

To trustlessly execute ML models, we can turn to powerful tools in cryptography. We focus on ZK-SNARKs (zero-knowledge succinct non-interactive arguments of knowledge), which allow a prover to show that an arbitrary computation was done correctly using a short proof. ZK-SNARKs also have the amazing property that the inputs and intermediate variables (e.g., activations) can be hidden!

In the context of ML, we can use a ZK-SNARK to prove that a model was executed correctly on a given input, while hiding the model weights, inputs, and outputs. We can further choose to selectively reveal any of the weights, inputs, or outputs depending on the application at hand.
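The statement such a proof attests to can be written as an ordinary predicate over public and private values. The Python sketch below checks that predicate in the clear for a toy integer dot-product "model"; a ZK-SNARK would convince a verifier that the predicate holds without showing the private values. The names `Statement`, `Witness`, and `relation_holds`, the toy model, and the hash-based commitment are all assumptions for illustration and are not part of zkml.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class Statement:
    """Values the verifier sees (public)."""
    weight_commitment: str
    output: int


@dataclass(frozen=True)
class Witness:
    """Values only the prover knows (private)."""
    weights: tuple[int, ...]
    salt: bytes
    inputs: tuple[int, ...]


def commit(weights: tuple[int, ...], salt: bytes) -> str:
    # Toy salted hash commitment, used here only to tie the weights to a public value.
    payload = ",".join(str(w) for w in weights).encode()
    return hashlib.sha256(salt + payload).hexdigest()


def model(weights: tuple[int, ...], inputs: tuple[int, ...]) -> int:
    # Toy "model": an integer dot product.
    return sum(w * x for w, x in zip(weights, inputs))


def relation_holds(stmt: Statement, wit: Witness) -> bool:
    """The claim being proven: committed weights, run on the inputs, give the output."""
    return (
        commit(wit.weights, wit.salt) == stmt.weight_commitment
        and model(wit.weights, wit.inputs) == stmt.output
    )


wit = Witness(weights=(2, -1, 3), salt=b"demo-salt", inputs=(5, 4, 1))
stmt = Statement(commit(wit.weights, wit.salt), model(wit.weights, wit.inputs))
```

Selective revealing corresponds to choosing which fields live in `Statement` and which in `Witness`. Here the output is public while the weights and inputs stay private; moving `inputs` into `Statement` would model an application where the input is revealed as well.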

With this powerful primitive, we can enable trustless audits and all the other applications we described above!

zkml: a first step towards trustless ML

As a first step towards trustless ML model execution for all, we’ve open-sourced zkml. To see zkml in action, consider proving the execution of an MNIST model by producing a ZK-SNARK. We can do so by running the following commands:

```bash
# Installs rust, skip if you already have rust installed
curl --proto '=https' --tlsv1.2 -sSf https://sh.rustup.rs | sh

git clone https://github.com/ddkang/zkml.git
cd zkml
rustup override set nightly
cargo build --release
mkdir params_kzg

# This should take ~8s to run the first time and ~4s to run the second time
./target/release/time_circuit examples/mnist/model.msgpack examples/mnist/inp.msgpack kzg
```

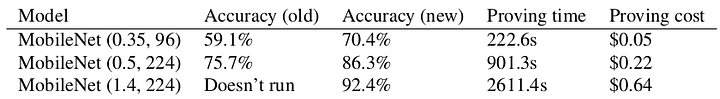

On a regular laptop, proving takes as little as 4 seconds and consumes ~2GB of RAM. We’re also the first framework to be able to compute ZK-SNARKs at ImageNet scale. As a sneak preview, we can achieve non-trivial accuracy on ImageNet in under 4 minutes of proving and 92.4% accuracy in under 45 minutes.

We’ve increased accuracy by 13%, decreased proving cost by 6x, and decreased verification times by 500x compared to our initial work!

Our primary focus for zkml is high efficiency. Existing approaches are resource-intensive: they take days to prove small models, consume many gigabytes of RAM, or produce large proofs. We’ll describe how zkml works under the hood in future posts.

We believe that efficiency is critical because it enables a future where anyone can execute ML trustlessly, and we’ll continue pushing towards that goal. Currently, models like GPT-4 and Stable Diffusion are out of reach, and we hope to change that soon!

Furthermore, zkml can enable trustless audits and all of the other applications we’ve mentioned! In addition to performance improvements, we’ve also been working on new features, including enabling proofs of training and trustless audits. We’ve also been adding features for models beyond vision models.

And there’s much more…

In this post, we’ve described our vision of zkml and how to use zkml. Check it out yourself!

There’s still a lot of work to be done to improve zkml. In the meantime, join our Telegram group to discuss your ideas for improving or using zkml. You can check out our code directly on GitHub, but we’d also love to hear your ideas for how to build with zkml. If you’d like to discuss your idea or brainstorm with us, fill out this form. We’ll be developing actively on GitHub and we’re happy to accept contributions!

In upcoming posts, we’ll describe how to use zkml to:

- Perform trustless audits of ML-powered applications

- Build decentralized prompt marketplaces for generative AI

- Enable privacy-preserving biometric ID

We’ll also describe the inner workings of zkml and its optimizations. Stay tuned!

Thanks to Ion Stoica, Tatsunori Hashimoto, and Yi Sun for comments on this post.